I'm K. Koyal, an introverted third-year B.Tech student. People of this generation often identify as introverts and are happy to chat with bots like Siri, Alexa and Google Assistant.

These assistants are still narrow AI, but they give a taste of what AGI (Artificial General Intelligence) is aiming for.

Definition of AGI

AGI is often called strong AI. "Strong" here isn't about physical power but about the ability to adapt, plan and learn.

Well, AGI doesn't just mean robots, or even robots plus a brain. It is more than that: robots plus a brain along with consciousness. Consciousness is the key factor, and we haven't achieved it so far.

We already encounter plenty of examples of AI in day-to-day life, like searching Amazon for items in natural language. You all remember the famous ad, "Silbatta ko english me kya kehte hai?" (What is a silbatta called in English?), right? Well, it is a grinding stone. Another example is the GPS that dynamically finds your path home.

Did you notice that I kept saying AI while talking about AGI? Let's see the difference between the two.

AI vs AGI

AGI (Artificial General Intelligence) is a form of AI (Artificial Intelligence), but the AI we use today and AGI differ in their capabilities and limitations.

The key difference between them is their level of generalization.

AI is designed to perform specific tasks, while AGI is designed to be versatile.

Let's take an example. When you play an online chess game against a computer, that algorithm is designed only to win the game against you. It cannot do other tasks, such as finding a Goa trip within the best budget. The cool thing is that an AGI model would be able to do both.

Interesting, right?✨🌟

I hope you won't confuse AI and AGI now, as I did before.

Ethics of creating AGI

Now that you know what AGI is, let's see what guidelines should be followed while creating an AGI model.

Fairness and Bias: The model must be fair and unbiased, and it should provide accurate results in all situations.

Trust and Transparency: The code should be open source and available to the public so that everyone can trust it.

Accountability: Someone must be accountable for the model, so that if things go wrong, he or she can handle the situation.

Social Benefits: The model must be socially beneficial and used for the welfare of society.

Privacy and Security: The model must be protected against threats, and the data it learns from must be kept secure from malicious hackers.

Technologies used in the development of AGI

Machine Learning: It is a key technology for creating AGI because it allows machines to learn from experience and data, and to adapt to new situations without being explicitly programmed.

Natural Language Processing (NLP): NLP allows machines to understand and process human language, which is essential for building intelligent systems that can interact with humans naturally and intuitively. It involves techniques such as parsing, sentiment analysis, language translation, and text-to-speech conversion.

Neural Networks: A neural network is a type of machine learning model composed of layers of interconnected nodes, or neurons, loosely inspired by the behavior of biological neurons. It can model complex, non-linear relationships in data.

Cognitive Science: It provides a theoretical foundation for understanding human cognition. By studying the way that humans think, learn and reason, cognitive science can develop theories and models of human intelligence that can be used to guide the development of AGI systems.

Deep Learning: Deep learning uses neural networks with many layers. It is particularly effective in areas such as image recognition, speech recognition, and natural language processing, all of which are important components of AGI. Using it, an AGI could learn to understand and interact with the world in a way that is similar to how humans do.

Reinforcement Learning: It is a type of machine learning in which machines learn through trial and error and feedback from the environment, much like humans do. That makes it particularly useful for creating AGI, which has to perform intellectual tasks across a wide range of domains.

Robotics: It is used in areas such as manufacturing, logistics, and healthcare, where machines can perform tasks that are repetitive, dangerous, or beyond the capabilities of humans. By using robotics in these domains, an AGI could learn to perform tasks that are similar to those performed by humans, but with greater speed, precision, and safety.
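To make the trial-and-error idea behind reinforcement learning a little more concrete, here is a minimal sketch of an epsilon-greedy agent on a toy "multi-armed bandit" problem. Everything in it is an assumption made for illustration: the function name `run_bandit`, the three reward averages and the exploration rate are hypothetical, and a real AGI system would be far more involved.

```python
import random


def run_bandit(true_means, steps=3000, epsilon=0.1, seed=0):
    """Learn which action pays best purely from trial, error and feedback.

    true_means is hidden from the agent; it only sees noisy rewards.
    """
    rng = random.Random(seed)
    estimates = [0.0] * len(true_means)  # the agent's current guess per action
    counts = [0] * len(true_means)       # how often each action was tried

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(len(true_means))
        else:
            # Exploit: pick the action that looks best so far.
            action = max(range(len(true_means)), key=lambda a: estimates[a])

        # Feedback from the environment: a noisy reward.
        reward = rng.gauss(true_means[action], 1.0)

        # Incremental average: move the estimate toward the new reward.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts
```

Calling `run_bandit([0.1, 0.5, 2.0])` returns the learned estimates and how often each action was chosen. Nobody tells the agent which action is best; it ends up choosing the third one most often simply because that action kept paying more.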

The Alberta Plan for AI Research 📃

This research plan was published by Richard S. Sutton, Michael H. Bowling and Patrick M. Pilarski, all of whom are well-established researchers associated with DeepMind and the University of Alberta.

How is this research plan different?

It overlaps with what lots of people are doing today, but it is deliberate about what it treats as important. Its focus on continual learning is what makes it different from others.

The plan tells us how these professors approach AGI.

Distinguishing Feature💫

Emphasis on ordinary experience:

This means the agent cannot rely on special training sets, human assistance or access to the internal structure of the world.

These do not scale up with computational resources, which is why they are left out.

Temporal uniformity:

Every time step is treated the same way. If training information is provided, it must be provided in the same way at every time step, with no special training periods.

This focus on temporal uniformity leads to an interest in non-stationary, continuing environments and in continual learning and meta-learning.

Cognizance of computational considerations:

Moore's law tells us that the number of transistors on a microchip doubles about every two years, while the cost of computers is halved.

The goal is to find the algorithms that make the best use of the available computational resources.

Awareness of other intelligent agents:

- The agent must be aware that other intelligent agents might be present in the environment, and it must be able to interact with them and learn from them if required.
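As a small worked example of the "doubles about every two years" rule mentioned under computational considerations above, the arithmetic can be sketched like this. The function names and the starting transistor count are hypothetical, and the rule is treated as exact only for the sake of illustration.

```python
def growth_factor(years, doubling_period_years=2.0):
    """How many times larger the transistor count is after `years`."""
    return 2 ** (years / doubling_period_years)


def projected_transistors(initial_count, years, doubling_period_years=2.0):
    """Project a transistor count forward under the doubling rule."""
    return initial_count * growth_factor(years, doubling_period_years)


if __name__ == "__main__":
    # Hypothetical chip with 1,000,000 transistors today.
    for years in (2, 10, 20):
        print(years, "years ->", round(projected_transistors(1_000_000, years)))
```

Ten doublings in 20 years means roughly a thousandfold increase, which is why the plan cares about methods that keep improving as more computation becomes available.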

Roadmap to an AI prototype

This roadmap is also part of the Alberta Plan.

1. Representation I: Continual supervised learning with given features.

Find the best algorithm

Make it faster, more robust and able to keep learning over time.

Meta-learning

Normalization of features.

2. Representation II: Supervised feature finding.

Create new features

Adjust neurons to get the optimal result

Init, backprop, utility, drop, re-init (meta-learning)

3. Prediction I: Continual Generalized Value Function (GVF) prediction learning.

Working with RL

How good a state is, measured by the rewards expected to follow from it.

4. Control I: Continual actor-critic control.

- Up to this step we are not acting on the environment, we are just predicting what happens; from here the agent also chooses actions

5. Prediction II: Average-reward GVF learning.

- Maximize the average reward

6. Control II: Continuing control problems.

A model-free RL agent is ready

Combine all the algorithms and convert them to continual learning

7. Planning I: Planning with average reward.

- Maximize the average reward using a model, or find a new model (which is still an unsolved problem)

8. Prototype-AI I: One-step model-based RL with continual function approximation.

Recursive update function

Predict the next step and update based on certain observations

9. Planning II: Search control and exploration.

Control how the search is done

Find what we are missing to this date and fill the gaps

Prioritize things

10. Prototype-AI II: The STOMP Progression

Subtask, Option, Model, and Planning

For example, if you want to change the direction of a car, you use muscle power to turn the steering wheel. Likewise, the model needs to learn which part needs to be moved.

11. Prototype-AI III: Oak.

- Set of options (a. fish, b. use a computer)

12. Prototype-IA: Intelligence amplification.

The model should do things very well.

Agent-to-agent interaction

Multiplicative Scaling

I would recommend you watch the video for a better understanding of these topics.
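To give a feel for the first roadmap step, Representation I (continual supervised learning with given features, including normalization of features), here is a minimal sketch. It is not the authors' algorithm: it assumes a plain least-mean-squares (LMS) update and a running mean and variance computed with Welford's method, and the data stream, class name and function name are all made up for illustration.

```python
import random


class OnlineNormalizer:
    """Running mean and variance per feature (Welford's method)."""

    def __init__(self, n_features):
        self.count = 0
        self.mean = [0.0] * n_features
        self.m2 = [0.0] * n_features  # running sum of squared deviations

    def update(self, x):
        self.count += 1
        for i, value in enumerate(x):
            delta = value - self.mean[i]
            self.mean[i] += delta / self.count
            self.m2[i] += delta * (value - self.mean[i])

    def normalize(self, x):
        if self.count < 2:
            return [0.0] * len(x)  # not enough data for a variance yet
        out = []
        for i, value in enumerate(x):
            std = (self.m2[i] / (self.count - 1)) ** 0.5
            out.append((value - self.mean[i]) / std if std > 0 else 0.0)
        return out


def run_continual_lms(steps=5000, step_size=0.01, seed=0):
    """Learn from a stream one example at a time, with no separate training phase."""
    rng = random.Random(seed)
    normalizer = OnlineNormalizer(2)
    weights = [0.0, 0.0]
    bias = 0.0
    errors = []
    for _ in range(steps):
        # Hypothetical stream: two given features on very different scales.
        x = [rng.uniform(0, 1), rng.uniform(0, 1000)]
        target = 3.0 * x[0] + 0.002 * x[1]

        # Normalize with statistics gathered so far, then predict.
        normalizer.update(x)
        z = normalizer.normalize(x)
        prediction = bias + sum(w * zi for w, zi in zip(weights, z))

        # LMS update: nudge the weights to reduce this step's error.
        error = target - prediction
        bias += step_size * error
        weights = [w + step_size * error * zi for w, zi in zip(weights, z)]
        errors.append(abs(error))
    return errors
```

`run_continual_lms()` returns the absolute error at every step. The errors near the end of the stream should be much smaller than those at the start, even though every time step is handled in exactly the same way.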

Development in the field of AGI so far

In this section, we are going to look at some popular systems created so far that are often discussed as steps toward AGI.

ChatGPT: The latest model, GPT-4, was officially launched on March 14, 2023, with a paid subscription giving users access to it in ChatGPT. GPT-4 accepts text and image input and produces results accordingly.

Tip: GPT-4 can be used for free on Microsoft Bing Chat.

Bard: Google's Bard is an early experiment in the field of AI. It was developed as a direct response to the rise of OpenAI's ChatGPT and was released in a limited capacity in March 2023 to a lukewarm response.

Tip: Go to bard.google.com and select Sign in at the top right.

Sophia: This social humanoid robot can speak 9 Indian languages. It is capable of imitating human gestures and facial expressions. It is the first humanoid robot to be granted citizenship, which it received from Saudi Arabia.

Tip: You can order Little Sophia, which is crafted by the same renowned developers, engineers, roboticists and AI scientists who created Sophia the robot.

Limitations

These popular models don't fully achieve AGI yet. Let's discuss what they lack.

ChatGPT: It still can't answer questions that involve "today", "tomorrow" or "yesterday"; instead, it asks for more details. This is what I've figured out from my own chat experience with GPT-4.

Google's Bard: A factual error in Bard's promotional demo wiped $100bn (£82bn) off the market value of Google's parent company, Alphabet (GOOGL), as its shares plunged by 7.44% in a single day.

Sophia: Sophia appears either to deliver scripted answers to set questions or to work in a simple chatbot mode where keywords trigger language segments. These are sometimes inappropriate, and sometimes there is just silence.

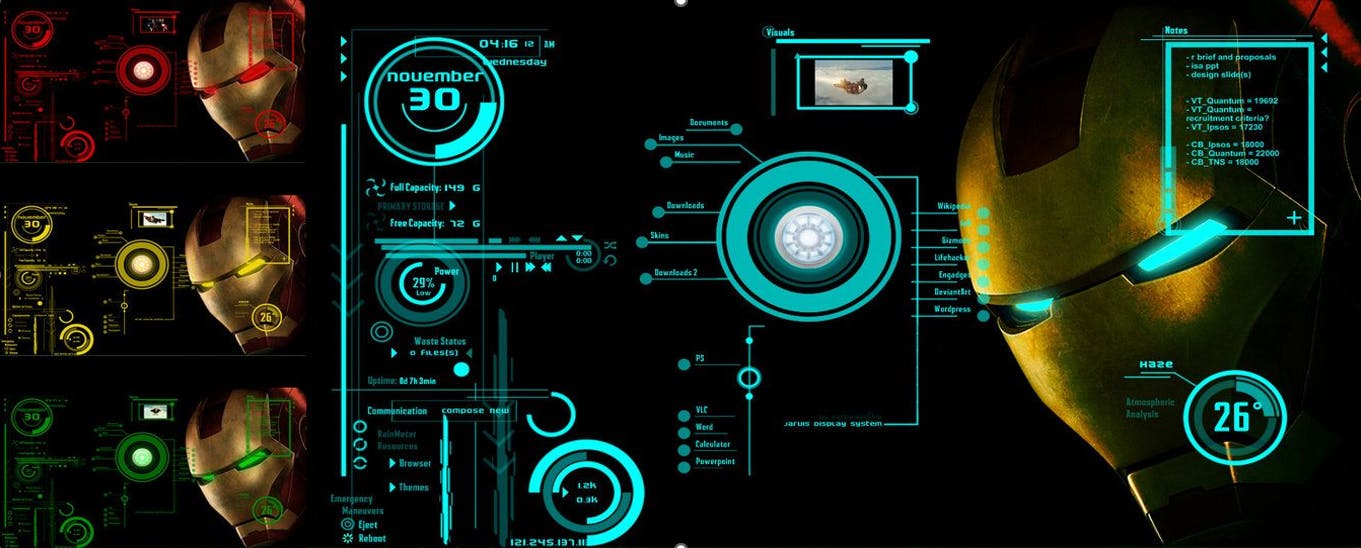

Jarvis of Marvel Universe

Well, you are all familiar with Jarvis, Tony Stark's assistant, who controls the Iron Man suit and the Hulkbuster armor.

In Avengers: Age of Ultron, Jarvis seems to be destroyed, but thanks to his consciousness he uses the internet to save himself, and later Jarvis becomes Vision.

Vision - the future AGI.

Conclusion

Is AGI possible?

Well, as of now it seems difficult, but it is possible. Elon Musk has said in one of his interviews that AGI is our future and we can't ignore it.

AGI's main focus is to replicate the human brain and do things like a human, or even better. But the human brain is composed of around 100 billion neurons, and each neuron can technically generate around 5-50 messages per second, all processed at the same time.

It is very hard to replicate the human brain. Even if we tried, it would require a large amount of computational power and storage, and a simulated brain, once built, would still be much slower than a real one.

But achieving true AGI would be the most defining moment in the history of humankind, and that moment gets closer each day.

Jarvis_2.O: Uses NLP to take commands and produces output

Thank You for being here! 🤞